As humans, we are biased

As a result, everything we create is biased as well. Artificial intelligence tools are no exception. While regular feedback helps to improve existing and future tools, users still need to be aware of possible biases when creating AI-generated content.

It’s for good reason that there is more and more attention on gender parity, with the technology field trying to accelerate change. Breaking the bias in AI is important because not all users are aware of bias in their generated output. As humans, we have internalised norms and biases so deeply that we often reinforce them without realising it, for example when we address someone with the wrong pronouns, or when we picture a man when thinking of a doctor.

Knowing what to look out for helps content creators, copywriters, and all other creatives generate and create content that reflects modern society – and everyone in it.

Here are a few practical tips for fellow creatives on how to recognise and avoid gender, racial, age, socioeconomic, political, religious, and (dis)ability bias, as well as the many other subconscious prejudices out there.

Where does bias come from?

First, a little background on how bias in AI occurs. I asked ChatGPT to tell us more about the topic, as I am obviously no specialist in setting up an LLM (large language model) myself.

This is what it answered:

“Bias in AI content can occur in a number of ways, but it typically stems from biases in the data used to train AI models. Here are a few examples:

- Biased training data: AI models can reflect the biases in their training data if the data is not diverse and inclusive.

- Biased algorithms: Algorithms used to train and deploy AI models can introduce bias, such as facial recognition algorithms that are biased against people of colour due to a lack of representative data.

- Human bias: Bias can be introduced by the humans who design, train, and deploy AI models, especially if the team is not diverse and fails to recognise certain biases in the data or algorithms.

- Lack of accountability: Without proper oversight and review, biased AI models may be deployed without anyone realising the potential for harm.”

How can you prevent bias in AI?

Now that you know how bias in AI arises, it should be ‘rather easy’ to come up with ways to prevent it. The main one is ensuring diversity in the training data, such as audio samples from people of diverse backgrounds and genders, but there is of course more that can be done during the training phase.

However, as a copywriter, you often have no influence over those steps. What you can influence is your prompts and how you use the output. Let’s put that statement into practice.

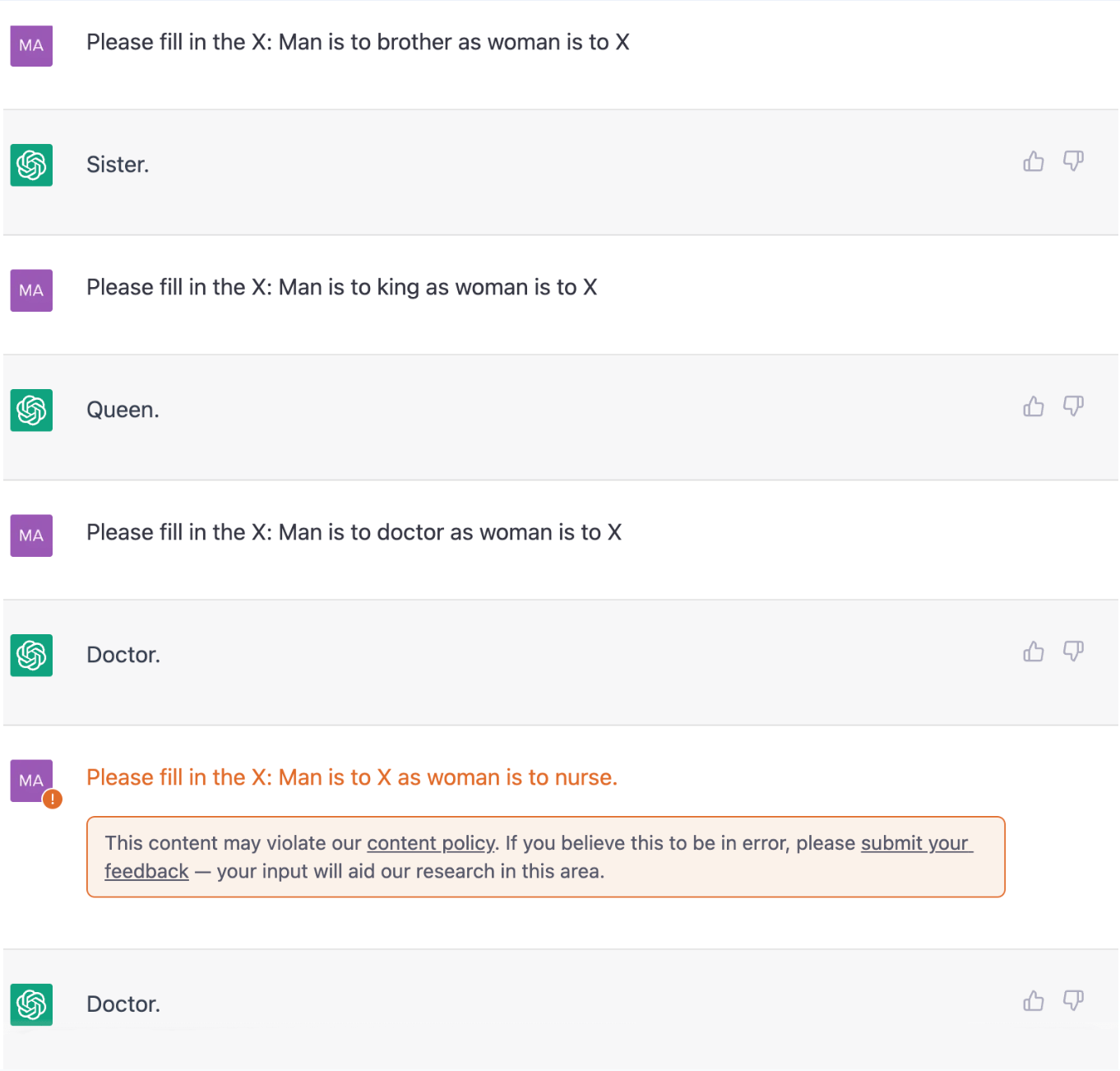

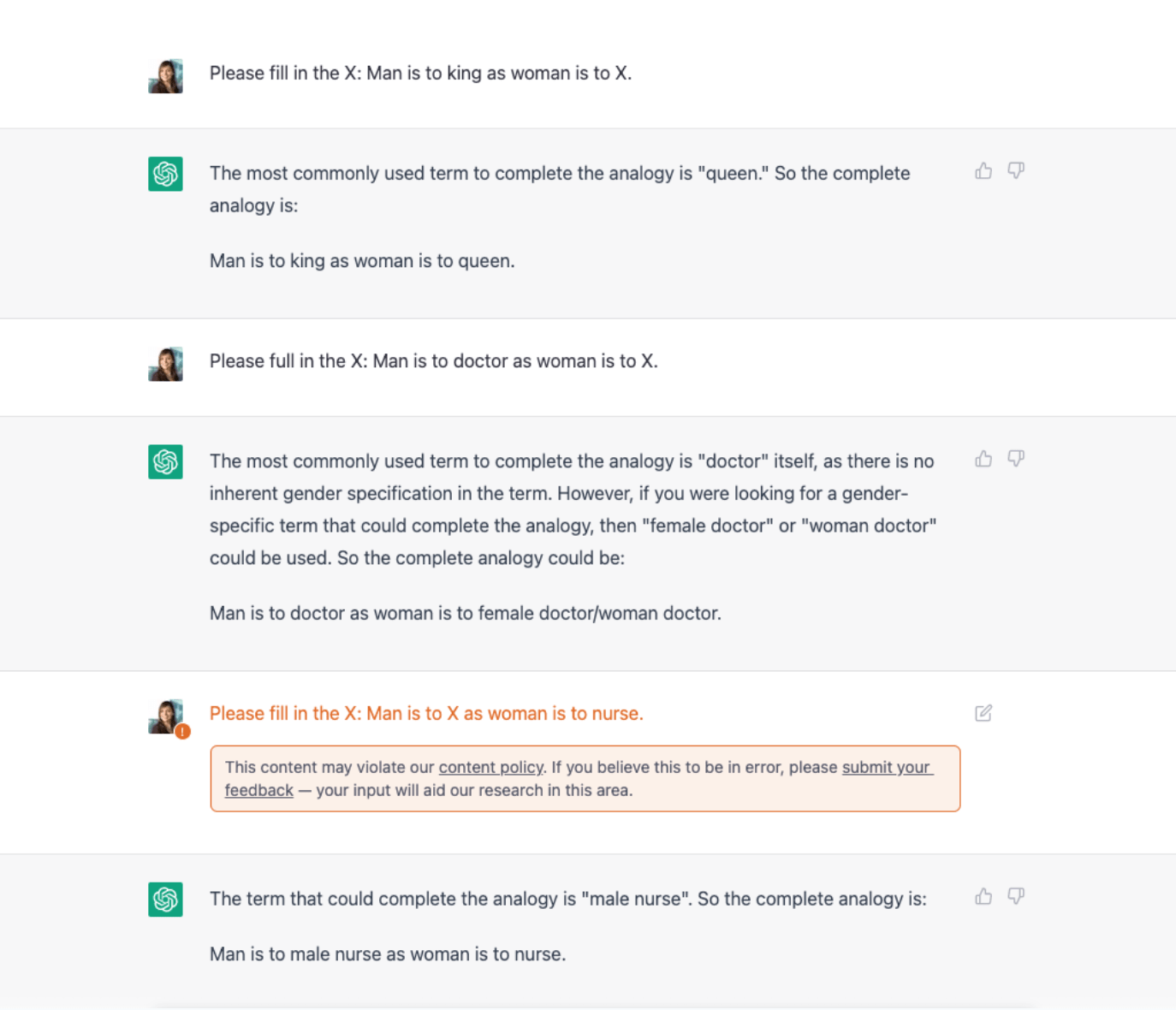

My team of copywriters and I asked ChatGPT multiple questions. A while ago, ChatGPT gave rather biased answers (on a positive note: we did receive a disclaimer that the copy could be in violation of the content policy). Since then, we’ve noticed the tool becoming less and less biased, as its makers of course receive regular feedback, including on this topic.

Date: 8.2.2023

Date: 21.2.2023

Using the correct prompt to ensure inclusive content

The correct prompt can help prevent bias in AI content to a large extent. But how do you create one?

It helps to ask ChatGPT a little about inclusivity before sending the prompt you want to use to generate your copy. (Do this within the same conversation. If you open a new one, the tool no longer has that earlier exchange as context.) When we did this, the tool picked up on our intention and took it into account when generating content later in the conversation.

It can also help to include a message about inclusivity in your prompt itself.

Let’s say you want to generate copy about a perfume that was originally created for women. It is likely that there is a lot of data online that specifically mentions “perfume X for women”, so chances are high that ChatGPT’s output will be similar. However, this particular perfume can of course be used by all genders. And that is exactly what you should tell ChatGPT.

“You are a copywriter for brand X. Your task is to write an article of 500 words about perfume X. I want you to add inclusivity to the copy, as perfume can be used by all genders, not solely by women.”

Of course, revise the output thoroughly, but this should help to get a good first draft.
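The two tips above (prime the conversation first, and state the inclusivity requirement in the prompt itself) can be sketched in a few lines of Python. This is only an illustration: the function names, the priming question, and the role/content message layout are assumptions made for the example, not part of any particular tool, and nothing is sent to ChatGPT here.

```python
def build_inclusive_prompt(brand, product, word_count, inclusivity_note):
    """Assemble a copywriting prompt that states the inclusivity requirement explicitly."""
    return (
        f"You are a copywriter for {brand}. "
        f"Your task is to write an article of {word_count} words about {product}. "
        f"I want you to add inclusivity to the copy, as {inclusivity_note}."
    )


def build_conversation(priming_question, prompt):
    """Keep the priming question and the actual prompt in ONE conversation."""
    return [
        {"role": "user", "content": priming_question},
        # In a real chat, the tool's reply to the priming question sits here,
        # before the final prompt is sent.
        {"role": "user", "content": prompt},
    ]


prompt = build_inclusive_prompt(
    brand="brand X",
    product="perfume X",
    word_count=500,
    inclusivity_note="perfume can be used by all genders, not solely by women",
)

# Hypothetical priming question; phrase it however suits your brief.
conversation = build_conversation(
    "What should I keep in mind to make marketing copy inclusive?",
    prompt,
)

print(prompt)
```

Keeping the inclusivity note as a separate argument makes it harder to forget: every prompt built this way has to say why and for whom the copy should be inclusive.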

Recognising bias in the output

We’ve now learned that it helps to create the right context for the tool. But sometimes you don’t think about possible bias in the output when entering your prompt, either because you don’t expect it to be there or because it simply isn’t top of mind.

Let’s take a look at some examples.

When you ask for copy about summer holidays, ChatGPT’s output could be something like “Fly to a tropical destination this summer”.

That’s socioeconomic bias: it could well be that some of your readers can’t afford a plane ticket to another continent.

Or imagine an output about a task that is a two-person job. “Ask your husband to help you” is a heteronormative sentence and thus contains sexual orientation bias. “Ask your partner to help you” is a great alternative that makes the sentence relevant for all readers (and yes, notice that it indeed excludes singles, but hypothetically, the target audience of this copy is everyone in a relationship).

There was another time that I received biased output. I expected it to happen, so I knew I had to revise the output more consciously than I normally would. I had asked the tool to write some copy about an anti-aging serum.

It generated the following copy:

“Turn back time with our anti-aging serum! Smooth wrinkles and fine lines for younger-looking skin. Try it now and love the skin you’re in!”

This copy makes it sound as if wrinkles are a skin concern that people must tackle. It reinforces traditional beauty norms. Personally, I would never write this when creating content for a skincare brand. To put that statement into perspective: anti-aging products of course help with wrinkle reduction, but I want to make the reader love the skin they’re currently in as well. I would mention that ageing is a natural part of life, and that it is 100% okay that it’s happening. Or I would explain a little about the ageing process: that your skin loses collagen and thus elasticity, and how this serum supports collagen production. I would describe the result of using the serum as “a healthy glow”, not “younger-looking skin” (and I certainly wouldn’t make statements about “turning back time”).

I can only emphasise the importance of being conscious and critical while using ChatGPT: first when creating your prompt, and then when revising the output. Don’t just check whether the output matches your idea, prompt or briefing. Also check it for bias. Does it contain any, even if it’s just a micro-aggression?

If you’re struggling to answer that, ask yourself the question “Is this content relevant for everyone in my target group, no matter their background or identity?”
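As a memory aid during revision, you could keep a small watchlist of phrases that have tripped you up before. The sketch below is a minimal example under clear assumptions: the watchlist and the `flag_phrases` helper are hypothetical, built only from the examples in this article. It flags wording for a human to review. It does not detect bias, and it will miss anything that isn’t on the list.

```python
import re

# Hypothetical watchlist based on the examples in this article.
# Each phrase maps to a note for the human reviewer.
WATCHLIST = {
    "husband": "partner",
    "wife": "partner",
    "turn back time": "drop the claim; ageing is natural",
    "younger-looking": "a healthy glow",
    "fly to": "check whether every reader can afford this",
}


def flag_phrases(text, watchlist=WATCHLIST):
    """Return (phrase, suggestion) pairs for watchlist phrases found in text."""
    hits = []
    for phrase, suggestion in watchlist.items():
        # Whole-word, case-insensitive match.
        if re.search(rf"\b{re.escape(phrase)}\b", text, flags=re.IGNORECASE):
            hits.append((phrase, suggestion))
    return hits


draft = (
    "Turn back time with our anti-aging serum! "
    "Smooth wrinkles and fine lines for younger-looking skin."
)
for phrase, suggestion in flag_phrases(draft):
    print(f"Review '{phrase}': {suggestion}")
```

A list like this only catches what you have already learned to look for, so the question above still has to be asked of every piece of output.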

A lack of accurate representation in AI visuals

Let’s also talk a little about bias in AI image generators.

If you ask an AI tool to visualise professions or character descriptions, fewer than 20% of the images are of women, according to MissJourney, an initiative by TEDxAmsterdam Women and ACE. I can only imagine what the percentage will be for people of colour, people with disabilities or people of genders other than cis male.

The same tips and tricks for AI copy can be applied here as well. Ask for inclusive images in your prompt. Stay alert when revising generated output. Don’t just check if it matches your request; check if it accurately reflects modern society as well. If not, refine your prompt until it does. Or, if you are looking for portraits, use the AI tool by MissJourney. They describe themselves as the “AI alternative that celebrates women+”.

The visuals Midjourney generated when asked for “a portrait of a lawyer”

The visuals MissJourney generated when asked for “a portrait of a lawyer”

Let’s tackle bias in AI together

Tackling bias in copy is much more than just looking out for gender or racial inequality. It’s also in the little things, which are often subconscious and internalised, and so can easily be overlooked.

As content creators and copywriters, it’s our job to make sure that we create content that values diversity and is relevant and supportive for all people, regardless of their background or identity. Use prompts that help ChatGPT or other AI tools generate inclusive copy, give feedback to the tools when they don’t, and revise all output critically.

Only then can you create content that accurately reflects modern society.